Many believe the renewed U.S. public investment in determining what treatments work best for which patients in real-world settings—known as comparative effectiveness research (CER)—will improve patient care by strengthening the evidence base for medical decisions. A major goal of CER is to encourage the use of effective therapies and discourage ineffective therapies. By promoting effective therapies, CER stands to increase the rewards and incentives for beneficial innovations in medical care. However, CER could dampen development of new, potentially effective therapies by creating additional hurdles for innovators. Policy makers can take steps to ensure that CER encourages beneficial innovation by creating a stable, transparent and predictable environment to compare therapies and setting explicit time frames for evaluation that are in sync with the innovation process. Policy makers and payers also could ease patient access to promising therapies when relative effectiveness is unknown by extending coverage with evidence development and sharing financial risk with innovators. Finally, policy makers will need to develop new ways to engage clinicians and patients to apply CER findings in their own practices and medical decisions.

- Renewed Commitment to Comparative Effectiveness Research

- Building an Evidence Base

- From CER Theory to Reality

- CER and Different Classes of Innovation

- CER and Different Phases of Innovation

- Incremental vs. Transformational Innovation

- Predictability in Generating and Interpreting CER Results

- Policy Options to Foster Beneficial Innovation

- Staying the Course Despite Uncertainties

- Notes

- Data Source

Renewed Commitment to Comparative Effectiveness Research

Building on a $1.1 billion investment in comparative effectiveness research in the 2009 economic stimulus legislation, the recently enacted health care reform law, known as the Patient Protection and Affordable Care Act (PPACA),1 creates a formal structure to guide publicly financed CER going forward. This public investment in CER reflects policy makers’ hopes that promoting the use of more effective therapies and discouraging those that are unduly risky or ineffective will enhance health.

The law creates a private, nonprofit corporation—the Patient-Centered Outcomes Research Institute (PCORI)—whose purpose is to help patients, clinicians, purchasers and policy makers make “informed health decisions by advancing the quality and relevance of evidence” of various approaches to preventing, diagnosing, treating, monitoring and managing diseases and other health conditions. The institute will identify comparative effectiveness research priorities, establish a research agenda, adopt methodological standards and administer a federal trust fund dedicated to CER.

Building an Evidence Base

Despite remarkable scientific and technological advances, the practice of medicine remains as much an art as a science, with a significant proportion of patient care lacking a solid evidence base.2 Many view comparative effectiveness research as a tool that clinicians and patients can use to select safer, more effective—and perhaps more cost-effective—therapies. This growing interest and public investment in CER reflect the hope that a better evidence base will yield better health outcomes and possibly higher-value health spending. The underlying premises are that:

- At least in some cases, comparing one treatment approach to others will demonstrate that one is superior for certain patients under certain circumstances or that a less-expensive alternative is equally effective.

- Payers and regulators will provide incentives and support for use of CER evidence by clinicians and patients—for example, through the use of shared decision-making models or by guiding and informing benefit design.

- Comparative effectiveness research findings will be convincing enough to be adopted by clinicians and patients and substantially change patterns of care, resulting in better patient outcomes and possibly reduced health care spending growth.

From CER Theory to Reality

In reality, constructive application of comparative effectiveness research findings is by no means certain. In many cases, the implications of research findings may not be clear-cut for a given clinical decision at a particular moment. Patients and clinicians weighing whether to change a treatment plan in light of CER findings will encounter much uncertainty. They may not know whether previous CER study results remain relevant, particularly if a new product with competing claims has entered the market. They may doubt whether CER findings based on early use of a treatment truly apply to clinicians or facilities with extensive experience using more recent refinements. They may wonder whether the findings apply only to the specific disease stage and patient population described in the study or can be extrapolated to a broader range of clinical indications and patients.

Clinicians, innovators who develop new therapies and researchers who study new therapies may be uncertain about how CER should affect their behavior. They may respond to CER studies by recognizing that some novel therapies offer little real benefit and—appropriately—abandoning them. In other cases, innovators and researchers may argue that the timing or methodology of CER studies cannot capture the potential—but still uncertain—value of innovations, forcing them to abandon promising therapies prematurely. Lastly, some stakeholders have expressed concerns that increased use of CER to inform clinical decisions could cause harm by holding innovative therapies to an inappropriate or onerous standard of effectiveness and discouraging their development and diffusion.

With the PPACA’s enactment, many decisions already have been made to minimize risks to innovation from comparative effectiveness research. For example, the law explicitly bars the PCORI from mandating coverage, reimbursement or other policies for any public or private payer. And the institute is specifically directed to ensure that “research findings not be construed as mandates for practice guidelines, coverage recommendations, payment, or policy recommendations.”3 However, concerns about potential negative effects of CER on innovation persist among stakeholders,4 and many important decisions remain. This analysis seeks to inform upcoming discussions by identifying policy approaches that can support beneficial innovation and deter wasteful or harmful innovation, drawing on the expertise of consumer advocates; clinical researchers; innovators in the areas of pharmaceuticals, medical devices, surgical procedures and nonsurgical clinical care; leaders at research funding agencies, regulatory agencies, public insurance programs and private insurance firms; and experts in health economics (see Data Source).

The first part of this analysis reviews the potential pathways by which CER could alter the development of innovative therapies and how CER’s influence may vary depending on the nature of the therapy in question. In particular, it considers the class of therapy involved, the development phase at which CER is brought to bear, and CER’s potential influence on incremental vs. transformational innovation. The second part of this analysis reviews potential policies related to the federal investment in CER—both PCORI and other areas of federal investment or influence—that could offer the greatest support for socially beneficial innovation, where benefits to a population outweigh costs regardless of the spending required.5

CER and Different Classes of Innovation

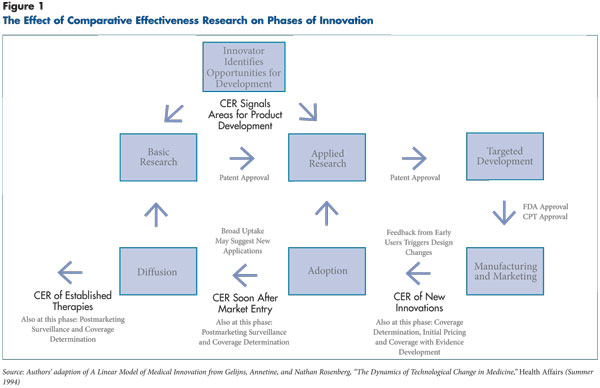

Even though comparative effectiveness research most likely will focus on treatments already in wide use, CER also may affect the development of new therapies before or at the point of market entry. As a new therapy is formulated, innovators accumulate data that can suggest whether the therapy ultimately will prove more effective than existing alternatives (see Figure 1). This information may lead developers to modify the innovation or abandon it. However, different types of therapies develop in different ways, and a research question that is simple for one class of therapy may be complex for another.

Comparative effectiveness research will not always compare like-to-like treatments—for example, drug-to-drug comparisons—but will sometimes compare different classes of interventions—such as surgery vs. drug therapy—that take different paths through the innovation process.

A new pharmaceutical, for example, may spend years in the preliminary phases of basic and applied research, followed by formal Food and Drug Administration (FDA) approval, but then move quickly through marketing and adoption without further study or alteration. A medical device may cycle repeatedly through the steps of applied research, targeted development and marketing as users suggest modifications that may significantly change effectiveness. A surgical procedure or a nonsurgical clinical service may diffuse less formally, without a discrete approval process or traditional marketing. Procedures and services can be modified by individual clinicians on a continuous basis, possibly resulting in significant variation in effectiveness.

Drugs and devices are regulated before market entry, but most other innovations are not. Currently, surgical innovators work with little external oversight and few regulatory requirements for evidence collection before disseminating new procedures. The expectation that surgeons would have to develop procedures that can withstand the test of CER could significantly change how surgical innovators identify and develop new procedures—a more profound change than for innovators in other fields where evidence of safety and efficacy is required before widespread patient use. Moreover, there is no consistent national oversight of clinical procedures in development.

Clinical researchers reported a lack of clarity regarding when the involvement of institutional review boards is needed to monitor the development of new procedures, let alone the adaptation of existing therapies. Some procedures and services undergo evaluation by the American Medical Association’s Current Procedural Terminology (CPT) Editorial Panel to obtain a dedicated CPT code for insurance billing purposes. In other cases, providers elect to submit claims under an existing CPT code for a similar service. By the time payers become aware that a newer, less well-established product or service is coming into wider use, such a treatment already has diffused into the market, and payers must determine whether and how they will cover it. Payers reported that despite their horizon-scanning programs, it can be difficult to detect when a clinically important new variation of a diagnostic test or clinical procedure is entering their local market unless and until a new CPT code is assigned.

An initial component of the PCORI research agenda might be simply to clarify, for therapeutic classes that currently face few or inconsistent evidence requirements, what treatments are in development or in use. An effort such as the planned “registry of registries” proposed by the Agency for Healthcare Research and Quality (AHRQ) would help ensure that CER evaluations of alternative therapies include all relevant interventions rather than systematically omitting some. Such a registry could help counter claims by proponents of, for example, a surgical treatment for a condition that also can be managed medically, that their approach would have been found just as effective had it been included in the study. Another benefit might be to ensure that studies of medical and surgical treatments do not routinely omit less-risky or less-costly alternatives, such as physical therapy or behavior modification programs.

Figure 1 – The effect of comparative effectiveness research on phases of innovation

CER and Different Phases of Innovation

The impact of comparative effectiveness research on an innovative therapy depends on the stage in the innovation’s life cycle at which the research is undertaken. Assessing therapies early in development would have an immediate impact but would omit the vast array of treatments already in use. By the time a therapy has diffused widely, however, investments in CER may have more limited returns. Waiting until a device or procedure enters into wide use allows providers time to develop expertise and correct any early problems before an evaluation is conducted, but in the meantime providers may become familiar with, and perhaps reliant on, the new therapy despite the lack of strong evidence of effectiveness.

CER proponents contend that patients and clinicians are hungry for information to guide treatment decisions and that simply making better information available could be sufficient to drive changes in practice. In a few important cases—for example, the use of hormone replacement therapy in post-menopausal women, which dropped sharply after evidence of its adverse effects was released—research findings have led to dramatic changes in treatment patterns for widely diffused therapies. In general, however, consumers are skeptical of evidence-based medicine,6 resistant to changes in recommendations for existing therapies, and susceptible to influence from stakeholders who may have reputational or financial investments in those treatments. This reality was vividly illustrated in 2009 by the public outcry over U.S. Preventive Services Task Force recommendations that women delay routine screening mammography until age 50.7

Similarly, clinicians can be resistant to abandoning familiar therapies of questionable benefit. For example, the use of spinal fusion surgeries for chronic neck and back pain has continued to rise despite evidence questioning their effectiveness in many cases.8 Not surprisingly, even in countries with well-established CER programs, payers are reluctant to withdraw coverage for relatively ineffective therapies in wide use.9 In the United States, insurers also must consider local market constraints and their need to maintain adequate provider networks—which may require more compromises in some communities than in others—potentially creating additional inconsistency in incorporating CER findings into coverage decisions, benefit design or provider payment policies.

Incremental vs. Transformational Innovation

As innovators try to understand how comparative effectiveness findings may change the treatment landscape, they may distinguish between incremental innovations, which offer measurable but small improvements over the current standard of therapy, and transformational innovations, which offer more significant benefits or even change the therapeutic approach altogether. Incremental innovation is not necessarily inferior—a series of incremental changes eventually may have the same effect as a single transformational innovation and may be easier to achieve. On the other hand, incremental innovations also can provide marginal benefit at significant cost.

In response to CER programs abroad, multinational biotechnology firms reportedly focus more on developing transformational treatments than on “me-too” products, seeking innovations with the potential for increased effectiveness large enough to secure higher prices. “Incremental [innovation] is seen as a less-good investment,” said an executive at a large pharmaceutical company. “Because incremental innovation, in a CER world, has a higher risk that it won’t be judged, be it by a formulary committee or quasi-government body, to provide sufficient value to make investment or reimbursement [in that product] worthwhile. So a [transformative] project might be riskier to start with, but getting and maintaining funding is a more stable venture.”

The effect of widespread CER on the balance of incremental and transformational innovation depends on how CER findings ultimately are used in the U.S. health care system. Unlike CER programs in other countries, the PCORI has no authority to determine relative cost effectiveness or directly inform any national system of coverage determination for any clinical product or service. In contrast, England and Wales’ National Institute for Health and Clinical Excellence (NICE) evaluates a new therapy against both the benefits and the price of its nearest competitor. As a result, innovators proposing a new product that offers little marginal benefit would have difficulty defending a significant price increase. In the United States, innovators face no such immediate constraints. Medicare officials are expressly prohibited from using PCORI research findings as the sole factor to make coverage decisions. Moreover, PCORI lacks the authority to set or negotiate prices for therapies—another mechanism for encouraging transformative innovations—in contrast to some of its European counterparts.

Although U.S. law deliberately avoids tying comparative effectiveness to coverage and pricing decisions, several factors may encourage pricing that more accurately reflects the degree of benefit offered by each therapy over the long term. First, U.S. private payers—unlike their public counterparts—are free to use CER information in payment and coverage policy. Second, treatments that offer only incremental improvement over competitors may be more quickly dominated by a superior alternative than products with transformational benefits. However, U.S. providers could interpret the current public promotion of CER as a mandate to choose the treatment option with the greatest absolute effectiveness, regardless of cost. If this viewpoint were widely adopted, incremental innovations might be extremely profitable in the short run.

The disincentive for incremental innovation seen in some European markets is unlikely to occur under the model for publicly supported CER in the United States. However, more effort may be necessary to encourage transformational innovation by augmenting current incentives with academic funding or research prizes aimed specifically at transformational ideas. With little influence over how providers and private payers will weigh cost in their evaluation of comparative effectiveness, U.S. policy makers may struggle to reach the optimal balance.

Predictability in Generating and Interpreting CER Results

Innovators’ concerns about the risks associated with comparative effectiveness research might seem ironic, given that research and development is itself such a risky endeavor. However, professional innovators—particularly large firms—manage the hazard associated with research carefully, in the same way a “buy-and-hold” investor might weigh the risks when choosing investments. These firms make multiple research bets many years in advance, knowing that some will fail but counting on their extensive knowledge of markets and their understanding of the approval process to guarantee at least a few big winners. As one innovator said, “Industry can’t deal with inconsistency.”

Innovators’ desire for a stable environment that allows calculated risk taking when identifying future investments might create an opportunity for policy makers to solidify evidentiary standards for CER. For example, innovators might be more supportive of rigorous CER evaluations if the evidentiary standards were clear and innovators were confident those standards would remain consistent for a guaranteed period.

Policy Options to Foster Beneficial Innovation

Policy can play a role in how comparative effectiveness research influences the development of innovative therapies. Policy makers might consider the following priority areas when crafting an overall strategy that optimizes the influence of CER on innovation.

The role of policy in promoting societal priorities. Policy makers can send clear signals about socially beneficial innovation for particular patient populations—areas where the current treatment does not sufficiently address the suffering associated with a condition, carries a high risk, or is unusually costly or burdensome—and where potential for improvement is great. CER findings could be translated not just into guidance for consumers and payers but also into guidance for innovators seeking to understand new, effectiveness-driven markets. “If it [a public CER entity] sets more…standards or conventions about what is ‘effective’ that weren’t in place before, that will influence the pipeline and investment in the pipeline,” a private insurance executive said. “Investors, given multiple opportunities to invest, will then give increasing preference to schemas that have lower [CE] hurdles to overcome for innovation and development…where…analytics are less stringent or where there is more societal pressure to have treatment (e.g. cancer) to implement innovation regardless of the existence of big effectiveness studies.”

The importance of stability. Policy guidance will be most helpful to innovators if the stated priorities remain relatively stable over the multi-year period in which innovators typically develop and refine new ideas. For example, setting research priorities with a five-year time frame would more effectively encourage innovators to invest in high-priority areas than revisiting priorities on an annual basis. Combining generous time frames with ongoing horizon-scanning activities would allow policy makers to add important new priorities while maintaining stable support for already-identified priorities.

Setting consistent evidence standards. The Patient-Centered Outcomes Research Institute, along with such entities as the U.S. Department of Health and Human Services (HHS) and the American Medical Association, could foster the use of comparative effectiveness research to promote beneficial innovation. Possible mechanisms include broadening the role of institutional review boards and setting guidelines for when new clinical approaches should be introduced as part of a research study—with standardized data collection requirements and appropriate protection for patient-subjects. Other approaches include raising the bar on evidence requirements for CPT consideration and creating incentives for innovators to register new therapies to better detect and track the development of surgical and other clinical services.

Setting consistent evidence standards may require new regulation of how therapies other than drugs or devices enter the marketplace. In many cases, the current process for granting CPT codes for new services may begin too late in the innovation process—after formal or informal experimentation and wide use—to either systematically leverage data from early clinical experience for subsequent CER studies or ensure that the evidence of the therapy’s efficacy and effectiveness meets minimum standards before patients are subjected to the risks of treatment.

From the perspective of enabling efficient and consistent CER, the current lack of regulation results in many lost opportunities. For example, a comparative effectiveness study evaluating approaches to the management of atrial fibrillation could easily compare pharmacological approaches to rate control and rhythm control, since accurate prescription records will be available in pharmacy claims data and in the electronic health records of large integrated health systems. However, it could be much more difficult to include in this comparative assessment an alternative procedure such as ablation, in which an electrophysiologist burns away selected areas of heart tissue that may be triggering abnormal heart rhythms, because providers may perform different variations of the procedure that claims data may not reflect. Innovators who develop new procedures may elect to bill for them under existing codes; these new procedures (and their effectiveness) will then be impossible to distinguish using claims.

It will be important to balance the benefits of capturing as much information as possible about new treatments against the costs of creating barriers to promising innovations. For example, hospitals might be reluctant to adopt relatively benign and noninvasive innovations, such as checklists, if they were required to engage in formal evidence-gathering beforehand. Policy makers may wish to consider where to draw the line between innovations that will require supporting data and tracking and those that will not. One possible dividing line would be to require more systematic data collection for new therapies that put patients at greater risk, are particularly expensive or are exotic compared to existing treatments—for example, performing a surgery with robotics that is already being performed successfully without robotics. This may well increase the evidence burden for some transformational therapies more so than for incremental ones, but only to a degree commensurate with the relative risks and costs posed to patients and society. That is, it may be appropriate to use CER to apply the brakes in a tempered way to therapies that currently face insufficient scrutiny, because discouraging non-beneficial or even harmful innovations is an important goal.

Encouraging providers and patients to participate in CER. Improving the infrastructure to collect and share data to the point where providers can submit data passively, without additional effort, can help payers and researchers gather more information about innovative therapies without limiting patients’ access. For example, data warehouses that collect information from multiple electronic health records could become repositories of tremendously valuable research data. Such approaches will need to address patients’ privacy concerns in a meaningful way because many will be uncomfortable with the idea of their health care data being used in research.

As an executive from a large private employer noted, “There needs to be public stakeholders involved when you’re thinking about this, so that consumer groups are engaged at the front end, so they’re not saying, ‘Why is this evil new black helicopter group getting my data?’”

At the same time, it would be neither feasible nor ethically necessary to obtain formal consent from tens of thousands of patients for a retrospective analysis. Ensuring that researchers can gain access to appropriate data will require not only reassessing current legal limitations on sharing information, but also addressing patients’ perceptions of what research participation means and how data are used (see box below for more information about barriers to patient participation in research).

Supporting personalized medicine. Among the criticisms leveled against comparative effectiveness research is that it could lead to a one-size-fits-all approach to medical care where the most effective treatment for the average patient is promoted at the expense of accommodating individual variation. In fact, a thoughtful CER program can support personalized medicine by sponsoring studies of real-world effectiveness for a broad range of patients, whether through strengthening methods for large observational studies (a potential function of the PCORI methodology committee) or an investment in broader, more representative randomized clinical trials. Policy makers also can support personalized medicine by promoting statistically appropriate analyses of differential responses to treatment across specific subgroups as the health reform law directs the PCORI to do. For example, facilitating the creation and maintenance of large, interoperable data networks may help researchers find enough patients to generate valid conclusions about specific subgroups. And policy makers can encourage the participation of diverse populations in studies, particularly for large, community-based trials.

Acknowledging uncertainty. Even the most carefully crafted comparative effectiveness research program cannot offer solutions for every clinical scenario. “Almost every [research] question is fluid,” one health services researcher said. “Games change mid-stream.” Inevitably some research findings will prove misleading or difficult to reproduce, and patients and clinicians may still face equivocal or contradictory studies.

Innovators also may argue that the results of a CER evaluation do not apply to the newest iteration of their therapy and so should be discounted. “What you never hear anyone talk about in great detail [is] the incredible nuances,” another health services researcher said. “It can make your head spin when you dive down into all the evidence.” Policy makers may wish to consider developing a category of evaluation that specifically acknowledges this uncertainty, by making patients’ access to the new, unproven therapy contingent on their willingness to participate in further data collection, or so-called coverage with evidence development. This may be a necessary mechanism to ensure the success of the large, pragmatic clinical trials that will be vital for CER.

Medicare’s coverage with evidence development program could be a model for such an approach, but it has been severely limited by the lack of clear statutory authority and by the political difficulty of withdrawing reimbursement for widely used therapies, even after they have been shown to be less effective than other therapies. In practice, coverage with evidence development has been limited to therapies that are new to market and have not yet built up a large base of stakeholders that might protest their subsequent withdrawal. Private payers are similarly reluctant to use this approach. One participant described insurers’ hesitancy to withdraw coverage, saying, “No one revisits coverage decisions. We never stop covering.”

Private payers are freer than their public counterparts to base coverage decisions on CER results, although they may remain reluctant to do so. A few national payers in the European Union and elsewhere have negotiated novel shared-risk coverage programs. Under this approach, manufacturers or providers of a therapy whose benefit is uncertain, or is limited to an unpredictable subset of patients, are paid under alternative arrangements. These models, in which patients have access to a treatment but the payer has reduced financial liability—for example, the insurer only pays for the service when the treatment proves effective for a particular patient—could be more palatable to innovators and patients than outright restrictions on access.

Encouraging patients and clinicians to understand and use CER. When comparative effectiveness research results in a clear finding of inferiority for an established therapy, payers and policy makers may find patients and clinicians unwilling to abandon the therapy because they believe it works for them. “[Abandoning an existing therapy] is rarely done because of politics,” a health services researcher said. “A lot of [providers and manufacturers] don’t want the research done. As long as they are earning money off a service, they don’t want to know if it is working well or not.”

In the current medical and political environment, it may simply be unrealistic to expect that CER findings will turn patients and clinicians away from an accepted therapy. For example, despite well-established evidence that beta-blockers and hydrochlorothiazide are as effective as or more effective than alternative treatments for high blood pressure, and in the absence of direct financial incentives for prescribing newer and more expensive drugs, many clinicians and patients still do not select the older drugs as first-line therapies. Assessing therapies soon after they enter the market, before patients and providers grow accustomed to them and before providers, in some cases, become financially reliant on them, could avoid this problem.

“We need to move CER way upstream, before we start adopting technology and procedures and teaching the public what is the best way to do something and then expecting them to change their mind and accept science,” a consumer advocate said. “Beliefs will trump facts every time.”

But this approach could not address the many therapies already in wide use that prove to be relatively ineffective. It would also require evaluating therapies early in their clinical application, when their optimal role may not yet be fully understood. Innovators would inevitably argue—at times correctly—that early evaluations could place newer therapies at a disadvantage. Since research comparing established therapies will likely consume the bulk of CER funding, substantial new research in methods of communicating information and motivating behavior change will be needed to identify policy levers to change patient and clinician behavior when CER findings contradict their perceptions of a treatment’s effectiveness.

Leveraging public investments in CER. As a congressionally chartered but private, nonprofit corporation with broad stakeholder representation but no executive powers, PCORI can only offer guidance to other CER funders about how they should evaluate research methods. Likewise, PCORI can offer guidance on how patients and private payers should evaluate CER findings, however funded. In this way, innovators who apply PCORI-endorsed methods when evaluating an innovation might be rewarded by having their findings recognized as credible by the widest possible audience. Convincing private CER funders to adhere to common standards, or convincing insurers that well-conducted CER studies are strong enough to support even unpopular coverage decisions, will require some reassurance that the standards themselves are stable.

Without positioning itself to affect, in some way, the work of privately funded researchers, PCORI may have little power to encourage development of beneficial innovations or to discourage innovations of little value. The estimated $600 million available annually through the trust fund will be insufficient to fund more than a few practical clinical trials on high-priority CER topics. Setting credible best practices and standards for methods and research conduct likely would have much broader influence on other CER funders.

In addition to encouraging meaningful private investments in CER, harmonizing PCORI's activities with other federal research investments could aid innovation as well. HHS is funding an inventory of CER designed to improve information-sharing and ultimately limit redundancies. Relevant federal research entities, such as AHRQ, the National Institutes of Health and the Department of Veterans Affairs, could incorporate PCORI priorities into their broader funding programs and review policies. Additional policy work may be required to determine how best to coordinate the work of federal agencies with relevant PCORI interests in methods development, guidance and public dissemination of CER results.

Importance of Patient Participation in Randomized Controlled Trials

If comparative effectiveness research is to be supported by large pragmatic trials in real-world settings, policies need to address existing barriers to adequate patient participation in research. Recent legislation has emphasized the need for CER to be patient centered, but many current U.S. clinical trials have great difficulty recruiting patients to participate.10

Researchers seeking to attract patients to their research can first ensure that the study is well designed and addresses an important clinical question. However, participation also requires that the patient is aware of the study, can navigate the registration process, is clinically eligible, and is willing and able to adhere to study protocols despite the attendant inconvenience. Another challenge for CER is that studies will often compare outcomes of treatments that are already available. Even if a clinician were willing to participate in the study and offer the treatments of interest to researchers, and even if insurers were willing to simplify the relevant payments and copayments (both uncommon circumstances in the current world of clinical research), patients may be less motivated to participate if they can obtain innovative therapies elsewhere, or if they can avoid a randomized design and its risk that they will not receive the treatment they prefer.

PCORI, along with relevant federal agencies, could support researchers in attracting more patients, particularly groups that historically have been underrepresented in research trials, in a variety of ways. Research indicates that many patients who are reluctant to participate in trials are unfamiliar with many of the fundamental concepts underlying clinical research, including randomization, blinding and placebo controls, and may doubt the effectiveness of current laws protecting human subjects.11 Explaining the scientific background and rationale for a particular clinical research study, ensuring that patients can understand it in the context of the scientific method, and addressing patients' pre-existing concerns and beliefs about participating in clinical trials are time-consuming tasks that require trained, experienced staff. PCORI could support the development of shared resources, such as educational media and trainers, to inform and educate the public about participating in clinical trials. Federal agencies sponsoring research studies could ensure that funding is adequate to address many of the barriers facing low-income patients, such as lack of reliable transportation or difficulty managing other medical needs.

Staying the Course Despite Uncertainties

Comparative effectiveness research guidance will not be issued in the form of revealed truths. The findings of CER studies likely will raise as many questions as they answer, and their results will be open to constant questioning and reinterpretation. As one health services researcher said, “I’ve been involved in health services research for 35 years, and the more I do the humbler I get. I think there’s a sense out there that the answers are hidden. They maybe have to be dug out, but when we get them, we’ve got them. The world doesn’t work that way.”

However, well-designed CER policies can promote beneficial innovations and discourage development of treatments with relatively little benefit. The potential effects of CER on innovation are far from universally harmful and may even promote innovation under certain circumstances. The complexity and uncertainty of innovation may be such that no policy can ensure that every beneficial innovation is promoted and protected. This could prove a political weakness for CER unless thoughtfully addressed. Understanding consumers’ beliefs about innovation and providing better access to participate in clinical trials of promising innovations might help address their concerns. CER policies that clearly state how access to innovation fits into societal values may help to amplify the effect of CER on patient and clinician decisions.

Notes

1. Patient Protection and Affordable Care Act (Public Law No. 111-148), Section 6301.

2. The Cochrane Collaboration, http://www.cochrane.org/faq/cochrane-collaboration-estimates-only-10-35-medical-care-based-rcts-what-information-based (accessed Aug. 23, 2010).

3. Ibid.

4. American Academy of Orthopaedic Surgeons, "Comparative Effectiveness Research," Position Statement, Doc. No. 1178 (December 2009); American Urological Association, testimony submitted to the Federal Coordinating Council on Comparative Effectiveness Research, U.S. Department of Health and Human Services (June 10, 2009).

5. Cutler, David M., et al., “The Value of Antihypertensive Drugs: A Perspective on Medical Innovation,” Health Affairs, Vol. 26, No. 1 (January/February 2007).

6. Carman, Kristin L., et al., “Evidence that Consumers Are Skeptical about Evidence-based Health Care,” Health Affairs, Vol. 29, No. 7 (July 2010).

7. Szabo, Liz, “Mammogram Coverage Won’t Change, Companies Say,” USA Today (Nov. 19, 2009).

8. Deyo, Richard A., Alf Nachemson and Sohail K. Mirza, "Spinal-Fusion Surgery—The Case for Restraint," New England Journal of Medicine, Vol. 350, No. 7 (Feb. 12, 2004); Deyo, Richard A., et al., "Overtreating Chronic Back Pain: Time to Back Off?" Journal of the American Board of Family Medicine, Vol. 22, No. 1 (January/February 2009).

9. Elshaug, Adam G., et al., “Challenges in Australian Policy Processes for Disinvestment from Existing, Ineffective Health Care Practices,” Australia & New Zealand Health Policy, Vol. 4, No. 23 (October 2007); Pearson, Steven, and Peter Littlejohns, “Reallocating Resources: How Should the National Institute for Clinical Excellence Guide Disinvestment in the National Health Service?” Journal of Health Services Research and Policy, Vol. 12, No. 3 (July 2007); Neumann, Peter J., Maki S. Kamae and Jennifer A. Palmer, “Medicare’s National Coverage Decisions for Technologies, 1997-2007,” Health Affairs, Vol. 27, No. 6 (November/December 2008).

10. Wujcik, Debra, and Steven N. Wolff, "Recruitment of African Americans to National Oncology Clinical Trials through a Clinical Trial Shared Resource," Journal of Health Care for the Poor and Underserved, Vol. 21, No. 1 (February 2010).

11. Corbie-Smith, Giselle, Stephen B. Thomas and Diane Marie M. St. George, “Distrust, Race and Research,” Archives of Internal Medicine, Vol. 162 (Nov. 25, 2002).

Data Source

This analysis draws on a literature review, an examination of relevant legislation, and roundtables and interviews in February 2010 with 33 experts to identify comparative effectiveness research policy options that could encourage beneficial innovation at both the patient and societal levels. Participants included consumer advocates; clinical researchers; innovators in the areas of pharmaceuticals, medical devices, surgical procedures and nonsurgical clinical care; leaders at research funding agencies, regulatory agencies, public insurance programs and private insurance firms; and experts in health economics. The roundtables and interviews were cosponsored by the National Institute for Health Care Reform, the Center for Studying Health System Change, the Association of American Medical Colleges and AcademyHealth.